Research Projects

| Current Projects | ||

|

DeepPTL - Lifelong Learning with Flexible Data Modalities for Production, Transport and Logistics Automation

This project aims to create new scientific methods for the machine analysis and evaluation of available data. The focus is on developing new machine learning methods, in particular deep learning, to automate processes that have so far been manual and to make already automated processes more reliable, efficient and flexible. From the current scientific challenges in machine learning, this research project will examine four problems in detail which, besides being scientifically demanding, promise broad applicability to a wide variety of current industrial problems. Specifically, these are methods for "lifelong learning", "high variability of the input vectors", "estimation of system uncertainties" and "scalability to embedded platforms". The benefit of the methods developed here will also be demonstrated within the project using selected application scenarios from the rapidly growing areas of production, transport and logistics. Contact person: Wolfram Burgard | ||

|

KaLiMU - Highly Integrated Camera-Lidar Sensor Technology for Mobile Robotics Applications

The ability to capture high-resolution, three-dimensional models of the environment even under difficult conditions is a basic prerequisite for innovation in robotics. The resulting data enable a wide range of applications such as navigation, manipulation, documentation and monitoring. In the KaLiMU project, the project partners are developing a highly integrated, multimodal sensor for environment perception that can also be used under difficult conditions, e.g. in busy environments with many refractive, reflective or transparent objects. Integrated sensor systems offer the major advantage that a robust calibration of the overall system can be guaranteed by the manufacturer. Fusing the lidar data with camera data close to the hardware yields further synergies with regard to the ambient-light-invariant colorization of environment models and the upsampling of the sparse depth information from the lidar module. Combining several different sensor types makes it easier to detect and handle potential malfunctions of individual sensors at an early stage, e.g. those caused by contamination of the sensor unit. This is relevant for the robust operation of the sensor system. In addition, from the end user's point of view, a compact, integrated sensor system that can be used without specialist knowledge of sensor calibration and data fusion is desirable. To this end, existing methods will be extended within the KaLiMU project and adapted to the novel sensor architecture. The project consortium is developing software modules that enable robust, multimodal mapping and localization (SLAM), environment-adaptive data fusion and the semantic interpretation of the sensor data. Contact person: Wolfram Burgard | ||

|

OML - Organic Machine Learning

The goal of the project "Organic Machine Learning" (OML) is to break with the conventional, rigid approach of training and deploying machine learning systems and to develop methods for machine learning which resemble organic learning, especially human learning, where systems learn throughout their entire lifetime, especially during application. The way of learning shall become more organic. Instead of learning on very large, clean and well-structured training data, which have been prepared in a time-consuming manner, the systems developed in OML shall learn from heterogeneous data with little or no preparation, as they occur in real-world scenarios, and shall require less training data, as humans do. Different sources, such as interaction with humans and own experience, shall be combined in a multimodal way and learning shall be focused on cases of uncertainty. To achieve this, systems must be able to detect in which cases they are unsure and where further learning is necessary. Furthermore, learning systems should not be a »black box« whose functioning outsiders cannot see into. Instead, they should be able to explain their decision-making and behavior. With the ability to justify their decisions, those systems will become more accepted by humans, and their application in real-world environments will become possible. Finally, the developed system shall be integrated in a robotic system. In an interactive robot programming scenario, the robot shall learn new skills from scratch by learning from physical, visual and verbal interaction with a human as well as from its own experience, just as an apprentice is taught by a master. Project Website Contact person: Wolfram Burgard, Oier Mees | |

|

Clearview-3D - Laser-Based 3D Sensor System for Autonomous Driving under Difficult Weather and Visibility Conditions

If vehicles are to drive fully autonomously in the future, systems are required that scan the environment with high precision and high spatial and temporal resolution, and that guarantee the necessary reliability even in difficult situations such as fog or rain. Today, a multitude of different sensors is integrated and combined for this purpose. However, sensor and data fusion in current configurations can be described as inadequate, since the individual data streams are processed and interpreted in parallel. The results are merged only very late in the processing chain. The outcome is a description of the environment marked by latency and blur. The »ClearView-3D« project aims to develop a LiDAR system that can capture a large part of the necessary environmental data, even under difficult conditions in which radar is used today. To complement and sharpen the range data provided by the LiDAR system, an additional sensor based on the principle of speckle correlation is integrated. Evaluating the degree of coherence and polarization of the light waves backscattered from objects in fog is intended to improve the identification of those objects. The new system approach is coupled with a parallel process for data interpretation. Within the »ClearView-3D« project, the hardware and software of the measurement system are developed, implemented as a proof of concept and evaluated under real conditions. Contact person: Wolfram Burgard | ||

|

Autonomous Street Crossing with Pedestrian Assistant Robots

The goal of this project is to enable pedestrian assistant robots to autonomously navigate street intersections without requiring human intervention. Several software modules employing multiple sensor modalities will be developed that interact with each other to achieve this goal. Moreover, the proposed modules, such as terrain classification, dynamic object tracking and lane detection, not only enable autonomous street crossing but are also crucial for safe autonomous navigation in urban areas. While current state-of-the-art robotic platforms still require a degree of human intervention at street intersections, our goal with this work is to eliminate the need for human assistance while ensuring safe operation and socially compliant navigation behavior. In particular, the ability to cope with changing and intersecting traffic lanes in dynamic urban environments will be a novelty developed within this project. Contact person: Wolfram Burgard | ||

|

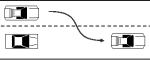

Learning Cooperative Trajectories in Mixed Traffic

The traffic of the future will probably differ markedly from today's. There are currently extensive efforts worldwide to automate traffic through the use of self-driving vehicles. However, since for a long time there will still be vehicles that cannot drive autonomously, as well as drivers who prefer to steer their vehicle themselves, we must expect, at least for a transitional period, mixed traffic consisting of self-driving and human-driven vehicles. Most current research on self-driving vehicles, however, deals with the autonomy of individual vehicles. In this project we investigate how to efficiently plan and execute the trajectories of a number of vehicles in a mixed-traffic scenario. In particular, we concentrate on planning and scheduling at intersections. Our goal is to produce, for hybrid scenarios in which automated and non-automated vehicles operate together, a schedule that ensures that the vehicles do not collide and at the same time reach their destinations efficiently. Contact person: Wolfram Burgard | ||

|

OpenDR - Open Deep Learning toolkit for Robotics

The aim of OpenDR is to develop a modular, open and non-proprietary toolkit for core robotic functionalities by harnessing deep learning to provide advanced perception and cognition capabilities, meeting in this way the general requirements of robotics applications in the application areas of healthcare, agri-food and agile production. The term toolkit in OpenDR refers to a set of deep learning software functions, packages and utilities used to help roboticists develop and test a robotic application that incorporates deep learning. OpenDR will provide the means to link robotics applications to software libraries (deep learning frameworks, e.g., TensorFlow) and to the operating environment (ROS). OpenDR focuses on the AI and Cognition core technology in order to provide tools that make robotic systems cognitive, giving them the ability to a) interact with people and environments by developing deep learning methods for human-centric and environment active perception and cognition, b) learn and categorise by developing deep learning tools for training and inference in common robotics settings, and c) make decisions and derive knowledge by developing deep learning tools for cognitive robot action and decision making. As a result, the developed OpenDR toolkit will also enable cooperative human-robot interaction as well as the development of cognitive mechatronics where sensing and actuation are closely coupled with cognitive systems, thus contributing to another two core technologies beyond AI and Cognition. OpenDR will develop, train, deploy and evaluate deep learning models that improve the technical capabilities of the core technologies beyond the current state of the art. It will enable a greater range of robotics applications that can be demonstrated at TRL 3 and above, thus lowering the technical barriers within the prioritized application areas.
OpenDR aims to provide an easily adopted methodology for adapting the provided tools in order to solve any robotics task without restricting it to any specific application. Contact person: Wolfram Burgard | ||

|

SORTIE - Sensor Systems for Localization of Trapped Victims in Collapsed Infrastructure

The primary research objective is to improve the detection of trapped victims in collapsed infrastructure with respect to sensitivity, reliability, speed and safety of the responders compared to the current state of scientific and technical knowledge. Therefore, the project aims to investigate, develop and evaluate a technical system that can augment the process of urban search and rescue and that helps limit the severe impacts of the disaster scenario. Enhanced sensitivity and reliability are the key criteria for finding all potential survivors with higher probability, even if they are situated under large layers of debris. This will be made possible by a novel sensing system that improves and combines existing sensing principles such as bioradar, cellphone localization, gas detection sensors and 3D scanners in a single unit. To increase the detection speed of surviving victims, the sensor system shall be integrated into an unmanned aerial vehicle (UAV) with improved ability for autonomous exploration of the disaster site, i.e. the flying platform shall be able to perform automated movements, scans and landing events. The seamless combination of multi-sensor systems and intelligent UAVs will allow the rescue management team to quickly assess the situation in order to initiate salvage procedures as fast as possible. By avoiding direct access to the debris field, hazards threatening the rescuers, such as the collapse of construction elements or gas leakage, will be minimized. Contact person: Wolfram Burgard | ||

|

ISA 4.0 - Intelligent Sensor System for Autonomous Monitoring of Production Plants in Industry 4.0

The project "Intelligent Sensor Systems for Autonomous Monitoring of Industrial Plants" (ISA) aims to research a mobile monitoring system for production plants in the chemical industry. The goal is to support inspectors on their rounds, up to and including the first fully autonomous execution of this task. To this end, the sensor system is meant to replicate the human senses, but also to extend them, and to evaluate the information obtained autonomously using machine learning (ML) methods developed in the project. In technical terms, the human senses correspond to, among others, microphones, gas sensors, chemical sensors, imaging systems, vibration sensors and temperature probes. By extending the human senses and closely coupling hardware and algorithm development, plant failures are to be reduced or predicted ("predictive maintenance") far more efficiently and reliably than before. This makes it possible to ensure consistent product quality, detect safety problems and derive recommendations for increasing the efficiency and resilience of the plants. The result is massive cost savings, increased safety of the plant and personnel, and thus a strengthening of Germany's advantage as an industrial location. Within the project, an industrial plant of the project partner EVONIK is planned as the test and evaluation environment. After successful completion of the project, Endress+Hauser intends to pursue product development together with the participating SMEs Telocate and dotscene. Transferability to other market segments is also to be ensured; this is taken into account as early as the concept phase and evaluated several times during the project. As a globally active sensor and automation company with customers in the chemical, pharmaceutical and food industries, E+H has the market access needed for the worldwide marketing of the technology developed in the ISA 4.0 project. Contact person: Wolfram Burgard | ||

| Completed Projects | ||

|

BrainLinks-BrainTools

BrainLinks-BrainTools is a Cluster of Excellence at the University of Freiburg funded within the framework of the German Excellence Initiative. It brings together members of the Faculties of Engineering, Biology and Medicine, as well as the University Medical Center and the Bernstein Center Freiburg. The scientific goal of BrainLinks-BrainTools is to reach a new level in the interaction between technical instruments and the brain, allowing them to truly communicate with each other. This requires the development of flexible yet stable, and adaptive yet robust applications of brain-machine interface systems. We are involved in several subprojects within the cluster:

Project Website Contact person: Wolfram Burgard | ||

|

NaRKo - Nachgiebige Serviceroboter für Krankenhauslogistik

(Compliant Service Robots for Logistics in Hospitals)

The NaRKo project aims at employing autonomous robots for delivery tasks as well as for guiding people to treatment rooms in hospitals. In populated environments, human safety is the foremost priority for robots operating autonomously. Hospitals pose special challenges for two reasons. First, the people encountered in hospitals might be injured, old or sick and therefore have an increased need for cautious interaction. Second, medical emergencies require medical staff to transport patients or rush through hospital hallways urgently. In the NaRKo project, we enhance autonomous navigation with force compliance to address the special challenges posed by the hospital environment. We examine novel sensory concepts that allow the robot to feel interaction forces during autonomous operation. Thus, the robot can promptly react to collisions with static obstacles or with people, which can never be fully ruled out in a dynamically changing environment. Furthermore, physical contact with the robot can be used as a means of intuitive interaction. Medical staff or patients can simply push the robot out of their way if it blocks their path. In addition, the NaRKo project examines how the robot can use physical contact during navigation, for example by applying gentle pushing forces to people in very crowded areas, where collision-free paths cannot be found with classic motion planning algorithms. In addition to force sensing and compliant robot navigation, we use visual perception for detection and tracking of humans and dynamic objects and we improve existing methods for situation analysis to enable safe and successful autonomous operation in the hospital environment. Contact person: Wolfram Burgard, Tobias Schubert, Marina Kollmitz | ||

|

EUROPA2 - European Robotic Pedestrian Assistant 2.0

Urban areas are highly dynamic and complex and introduce numerous challenges to autonomous robots. They require solutions to several complex problems regarding the perception of the environment, the representation of the robot's workspace, models of the expected interaction with users for action planning, estimation of the robot's own state as well as the states of all dynamic objects, the proper interpretation of the gathered information including semantic information, and long-term operation. The goal of the EUROPA2 project, which builds on the results of the successfully completed FP7 project EUROPA, is to bridge this gap and to develop the foundations for robots designed to autonomously navigate in urban environments outdoors as well as in shopping malls and shops, for example, to provide various services to humans. Based on the combination of publicly available maps and the data gathered with the robot's sensors, it will acquire, maintain, and revise a detailed model of the environment including semantic information, detect and track moving objects in the environment, adapt its navigation behavior according to the current situation and anticipate interactions with users during navigation. A central aspect of the project is life-long operation and reduced deployment effort by avoiding the need to build maps with the robot before it can operate. EUROPA2 is targeted at developing novel technologies that will open new perspectives for commercial applications of service robots in the future. Project Website Contact person: Wolfram Burgard, Michael Ruhnke | |

|

Flourish

To feed a growing world population with the given amount of arable land, we must develop new methods of sustainable farming that increase yield while minimizing chemical inputs such as fertilizers, herbicides, and pesticides. Precision agricultural techniques seek to address this challenge by monitoring key indicators of crop health and targeting treatment only to plants or infested areas that need it. Such monitoring is currently a time consuming and expensive activity. There has been great progress on automating this activity using robots, but most existing systems have been developed to solve only specialized tasks. This lack of flexibility poses a high risk of no return on investment for farmers. The goal of the Flourish project is to bridge the gap between the current and desired capabilities of agricultural robots by developing an adaptable robotic solution for precision farming. By combining the aerial survey capabilities of a small autonomous multi-copter Unmanned Aerial Vehicle (UAV) with a multi-purpose agricultural Unmanned Ground Vehicle (UGV), the system will be able to survey a field from the air, perform targeted intervention on the ground, and provide detailed information for decision support, all with minimal user intervention. The system can be adapted to a wide range of farm management activities and different crops by choosing different sensors, status indicators and ground treatment packages. The gathered information can be used alongside existing precision agriculture machinery, for example, by providing position maps for fertiliser application. Project Website Contact person: Wolfram Burgard, Christian Dornhege | |

|

HYBRIS - Hybrid Reasoning for Intelligent Systems

Knowledge Representation and Reasoning (KR&R) and, in particular, reasoning about actions, their effects and the environment in which actions take place, is fundamental for intelligent behaviour and has been a central concern in Artificial Intelligence from the beginning. To date, most KR&R approaches have focused on qualitative representations, which contrasts with requirements from many application domains where quantitative information needs to be processed as well. Examples of such quantitative aspects are time, probabilistic uncertainty, multi-criteria optimization, or resources like mass. We are involved in the subproject HYBRIS-C1. The purpose of this project is to combine high-level and low-level reasoning techniques for mobile manipulation tasks. We are developing planners that reason about the information the robot needs in order to solve a task. We therefore combine planning, active perception, and manipulation to solve tasks like searching for and fetching a specific object. Project Website Contact person: Wolfram Burgard, Nichola Abdo | ||

|

ALROMA - Autonomous Active Object Learning Through Robot Manipulation

Service robots will be ubiquitous helpers in our everyday life. They are envisioned to perform a variety of tasks ranging from cleaning our apartments to mowing our lawn or feeding our pets. For solving such tasks successfully, robots must be able to properly reason about and manipulate objects, which requires rich knowledge about the corresponding objects. To obtain such knowledge, robots need not only means for analyzing the geometry and appearance of objects, but also for retrieving their categories and inferring their characteristics. In this project, we aim at actively learning all the object information with minimal human supervision by leveraging the possibility of physical robot-object interaction and by analyzing the information available in the World Wide Web. The robot will actively learn the objects by testing estimated hypotheses through the interaction with the environment, e.g., by taking object data or by pushing or picking an object. In a similar manner, the robot will improve its skills from the experience gathered through the interaction with the objects. It will learn novel skills for manipulating the objects from demonstrations, which it generalizes and improves for similar objects and situations. ALROMA is part of the DFG Priority Programme 1527 "Autonomous Learning" with more than 50 scientists from the fields of machine learning, robotics and neuroscience participating. Project Website Contact person: Wolfram Burgard, Andreas Eitel | |

| iVIEW - intelligente vibrotaktil induzierte erweiterte Wahrnehmung (Extended cognition by intelligent vibrotactile stimulation) Visually impaired or blind people have a reduced ability to perceive their environment. Additional appliances such as canes extend their access to the surroundings, but at the cost of a drastically constrained perception radius. Devices equipped with ultrasound sensors reduce collisions but do not solve the problem of protecting the upper parts of the body. Furthermore, most available assistive systems use the auditory channel for information transfer. This is not desirable for visually impaired persons, as acoustics play an important role in their process of acquiring spatial orientation. The iVIEW project pursues fast 3D obstacle detection in conjunction with a priority-based information reduction as used in autonomous mobile robotics to generate a virtual representation of the surroundings. This information is transferred to the cognitive system of the human via the stimulation of skin receptors. Elaborate training schemes will be established to achieve a substitute spatial perception for blind and non-impaired people. The project is funded by the German Federal Ministry of Education and Research (Bundesministerium für Bildung und Forschung). Contact person: Wolfram Burgard, Knut Möller | |

|

LIFENAV

LifeNav - Reliable Lifelong Navigation for Mobile Robots is a project funded by the European Commission within FP7. The goal of the LIFENAV project is to develop the foundations to design mobile robot systems that can reliably operate over extended periods of time in complex and dynamically changing environments. To achieve this, robots need the ability to learn and update appropriate models of their environment including the dynamic aspects and to effectively incorporate all the information into their decision-making processes. Project Website Contact person: Wolfram Burgard, Bastian Steder | |

|

| ROTAH – Robot Task Learning by Active Tracking of Hands

This project aims to develop a system which enables a robot to learn tasks by observing a human user interacting with objects. With current techniques, a robot learns a task, such as moving a bottle from the shelf to the table, through kinesthetic training, manual scripting, or lengthy programming procedures. This project aims for a system that will make the teaching process easy even for non-experts. It emphasizes the idea of learning through observation in a largely unconstrained environment. The robot observes the actions of the human operator by tracking their hands and by automatically detecting and tracking the manipulated object. From this data, the robot will learn a model to repeat the task in an unsupervised way. To this end, new methods from computer vision as well as robot learning and manipulation will be developed, and the performance of existing methods will be improved. The project is funded by the Baden-Württemberg Stiftung gGmbH. Contact person: Wolfram Burgard, Thomas Brox, Luciano Spinello, Gian Diego Tipaldi, Andreas Lars Wachaja | |

|

RobDREAM

The basic principle behind the RobDREAM action is the observation that sleep does not only serve regeneration purposes, but positively affects working memory and thereby improves higher-level cognitive functions such as decision making and reasoning. In RobDREAM we will enable robots to enhance their capabilities in their inactive phases by processing experiences made during the working day and by exploring, or dreaming of, possible future situations and how best to solve the challenges in these situations. We will improve industrial mobile manipulators’ perception, navigation as well as manipulation and grasping abilities by automatic optimisation of parameters, strategies and selection of tools within a portfolio of key algorithms by means of learning and simulation, and through use-case driven evaluation. Project Website Contact person: Wolfram Burgard | |

| STAMINA Part handling during the assembly stages in the automotive industry is the only task with automation levels below 30% due to the variability of the production and to the diversity of suppliers and parts. The full automation of such a task will not only have a huge impact on the automotive industry but will also act as a cornerstone in the development of advanced mobile robotic manipulators capable of dealing with unstructured environments. Such automation will open new possibilities for manufacturing SMEs in general. The STAMINA project will use a holistic approach by partnering with experts in each of the necessary key fields, thus building on previous R&D to develop a fleet of autonomous and mobile industrial robots with different sensory, planning and physical capabilities for jointly solving three logistic and handling tasks: De-palletizing, Bin-Picking and Kitting. The robot and orchestration systems will be developed in a lean manner using an iterative series of development and validation tests that will not only assess the performance and usability of the system but also allow goal-driven research. STAMINA will give special attention to system integration, promoting and assessing the development of a sustainable and scalable robotic system to ensure a clear path for the future exploitation of the developed technologies. In addition to the technological outcome, STAMINA will give an impression of how the sharing of work and workspace between humans and robots could look in the future. Project Website Contact person: Wolfram Burgard, Gian Diego Tipaldi, Luciano Spinello | |

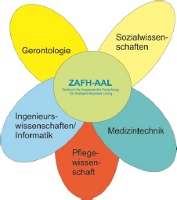

| ZAFH-AAL ZAFH-AAL – Zentrum für Angewandte Forschung an Hochschulen für Ambient Assisted Living (Collaborative Center for Applied Research on Ambient Assisted Living) The target of this project is the development of systems and technologies which allow elderly and disabled people to be independent and help them sustain social relationships with others. The project is motivated by current demographic developments, considering the need of the growing group of elderly people for an extended self-determined lifestyle in their accustomed environment. The systems and technologies in this project will be created in interdisciplinary cooperation, taking gerontological and socio-scientific aspects into account. The project is funded by the Baden-Württemberg Ministry of Science, Research and the Arts (Ministerium für Wissenschaft, Forschung und Kunst). Project Website Contact person: Wolfram Burgard, Christophe Kunze | |

|

SFB/TR-8 - Spatial Cognition

Spatial Cognition is concerned with the acquisition, organization, utilization and revision of knowledge about spatial environments, be it real or abstract, human or machine. Research issues range from the investigation of human spatial cognition to mobile robot navigation. The goal of the SFB/TR 8 is to investigate the cognitive foundations for human-centered spatial assistance systems. The SFB/TR 8 Spatial Cognition comprises several projects which are structured into the three research areas Reasoning, Action, and Interaction. Reasoning projects are concerned with internal and external representations of space and with inference processes using these representations. Action projects are concerned with the acquisition of information from spatial environments and with actions and behavior in these environments. Interaction projects are concerned with communication about space by means of language and maps. Project Website Contact person: Wolfram Burgard, Cyrill Stachniss | |

| TAPAS The goal of TAPAS is to pave the way for a new generation of transformable solutions to automation and logistics for small and large series production that are economically viable and flexible, regardless of changes in volumes and product type. TAPAS pioneers and validates key components to realize this vision: mobile robots with manipulation arms will automate logistic tasks more flexibly and more completely by not only transporting, but also collecting needed parts and delivering them right to the place where they are needed. TAPAS robots will even go beyond moving parts around the shop floor to create additional value: they will automate assistive tasks that naturally extend the logistic tasks, such as preparatory and post-processing work, e.g., pre-assembly or machine tending with inherent quality control. TAPAS robots might initially be more expensive than other solutions, but through this additional creation of value and by a faster adaptation to changes with new levels of robustness, availability, and completeness of jobs, TAPAS robots promise to yield an earlier return on investment. Project Website Contact person: Wolfram Burgard, Gian Diego Tipaldi | |

|

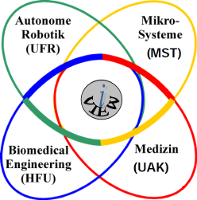

Embedded Microsystems

In the research prospects 2000+ the Max Planck Society states: "In the future, numerous objects of our daily life will contain embedded microsystems with interfaces to people and wireless connections to other objects. This requires reliable, highly meshed systems that will exceed the dimension of what we know today from the World Wide Web" - Applications in medical diagnostics, instrumented homes, manufacturing environments, and vehicles among others demand a systematic development of methods for the design and the secure operation of such embedded microsystems as well as the education of highly qualified scientists in this area. The PhD program "Embedded Microsystems" at the University of Freiburg strives for this goal. Project Website Contact person: Wolfram Burgard |

|

|

EUROPA - European Robotic Pedestrian Assistant

In the field of robotics, there has recently been tremendous progress in the development of autonomous robots that offer various services to their users. Typical services include support of elderly people, cleaning, transportation and delivery tasks, exploration of inaccessible hazardous environments, or surveillance. Most of the systems developed so far, however, are restricted to indoor scenarios, non-urban outdoor environments, or road usage with cars. There is a serious lack of capabilities of mobile robots to navigate safely in highly populated outdoor environments. This ability, however, is a key competence for a series of robotic applications. The goal of the EUROPA project is to bridge this gap and to develop the foundations for service robots designed to autonomously navigate in urban environments outdoors as well as in shopping malls and shops to provide various services to users including guidance, delivery, and transportation. EUROPA will develop and apply sophisticated probabilistic scene interpretation techniques to deal with unpredictable and changing environments. Based on data gathered with its sensors, the robot will acquire a detailed model of the environment, detect and track moving objects in the environment, adapt its navigation behavior according to the current situation, and communicate with its users in a natural way, even remotely. Key innovations of the project are made by robustly and reliably addressing the autonomous navigation problem in complex and populated environments, the ability to build appropriate spatial models, and by reasoning about them based on the verbal and natural interaction with users. EUROPA is targeted at developing novel technologies that will open new perspectives for commercial applications of service robots in the future. Project Website Contact person: Wolfram Burgard | |

|

muFly

Autonomous micro flying robots combine a large variety of technological challenges and are therefore an excellent showcase for leading edge micro/nano technologies and their integration with information technology towards a fully operational intelligent micro-system. This project therefore proposes the development and implementation of the first fully autonomous micro helicopter comparable in size and weight to a small bird. The key challenges of the project include innovative concepts for power sources, sensors, actuators, navigation and helicopter design and their integration into a very compact system. The envisaged fully autonomous micro-helicopter will weigh less than 30g and measure only 10cm in diameter. The project shall develop and demonstrate innovative approaches and technologies in: (1) system level design and optimization of autonomous micro aerial vehicles, (2) multifunctional use of components (integration of camera and distance sensor, batteries doubling as structural elements, or a propeller used for gyroscopic stabilization), (3) design of "smart" miniature inertial sensors and omnidirectional vision sensors with polar pixel arrangement, (4) miniaturized fuel-cells, (5) miniaturized piezoelectric actuators with enhanced power to weight ratios, and (6) control and navigation concepts that can cope with limited sensor and processing performance. Project Website Contact person: Wolfram Burgard, Giorgio Grisetti |

|

|

INDIGO - Interaction with Dialogue Enabled Robots

The goal of INDIGO is to develop technology that will facilitate the advancement of human-robot interaction. This will be achieved both by enabling robots to perceive natural human behaviour and by making them act in ways that are familiar to humans. Besides the capability of recognizing and producing human speech and facial expressions, the robots will be able to navigate and maneuver in a socially compatible way. This comprises following and guidance behaviours as well as adapting and imitating human motion patterns, e.g. to avoid collisions. The final system will be deployed in the premises of a museum to work as a robotic tour guide. Project Website Contact person: Wolfram Burgard, Kai Arras |

|

|

RAWSEEDS

The aim of the Rawseeds Project is to stimulate and support progress in autonomous robotics by providing a comprehensive, high-quality Benchmarking Toolkit. The absence of standard benchmarks is a widely acknowledged problem in the robotics field, and is doubly harmful to it: firstly, because it prevents recognition of scientific and technical progress, thus discouraging research and development; and secondly, because it prevents new actors (and particularly companies) from entering the robotic sector, as heavy investments are needed to compensate for that absence. Rawseeds datasets are sets of time-synced data streams, generated by the sensors aboard a robot platform when it moves through an environment. The datasets are gathered in real-world locations. The Rawseeds Benchmarking Toolkit is mainly targeted to the problems of localization, mapping and SLAM (i.e. Simultaneous Localization And Mapping) in robotics; but its use is not limited to them. It will be freely downloadable from this website, as soon as it is completed. By the way: "Rawseeds" means "Robotics Advancement through Web-publishing of Sensorial and Elaborated Extensive Data Sets". Project Website Contact person: Wolfram Burgard |

|

|

DESIRE - The German Service Robotics Initiative

The project aims to integrate leading edge technology in the field of service robotics and to develop an open, extensible system architecture. The project is funded by the German ministry of research. Project Website Contact person: Wolfram Burgard | |

|

Situation Recognition in Dynamic Multi-Agent Systems

Funded by Siemens Corporate Technology, Siemens AG, Munich, Germany. The goal of this project is to develop a model for spatio-temporal situations using hidden Markov models based on relational state descriptions, which are extracted from the estimated state of an underlying dynamic system. Our model covers concurrent situations, scenarios with multiple agents, and situations of varying durations. Contact person: Wolfram Burgard |

|

|

CoSy - Cognitive Systems for Cognitive Assistants

The main goal of the EU project CoSy is to advance the science of cognitive systems through a multi-disciplinary investigation of requirements, design options and trade-offs for human-like, autonomous, integrated, physical (e.g., robot) systems, including requirements for architectures, for forms of representation, for perceptual mechanisms, for learning, planning, reasoning and motivation, and for action and communication. Project Website Contact person: Wolfram Burgard |

|

|

OpenSLAM - The resource for open source SLAM implementations

The simultaneous localization and mapping (SLAM) problem has been intensively studied in the robotics community in the past. Different techniques have been proposed but only a few of them are available as implementations to the community. The goal of OpenSLAM.org is to provide a platform for SLAM researchers which gives them the possibility to publish and promote their algorithms. Project Website Contact person: Cyrill Stachniss, Giorgio Grisetti |

|

|

GMapping

GMapping is a highly efficient Rao-Blackwellized particle filter for learning grid maps. The ideas are (1) to draw the particles from an improved proposal, computed according to the most recent observation, and (2) to resample whenever an indicator of the variance of the weights is below a given threshold. The first allows for drawing the particles close to the true posterior, while the second reduces the particle uncertainty. The source code as well as several corrected and uncorrected datasets are available. Project Website Contact person: Giorgio Grisetti, Cyrill Stachniss |
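The resampling rule in idea (2) can be illustrated with a small sketch. It is not taken from the GMapping source code. It assumes that the "indicator of the variance of the weights" is the effective sample size N_eff = 1 / sum(w_i^2) over normalized weights, and that the threshold is half the number of particles. The function names, the `threshold_ratio` parameter, and the low-variance resampling scheme are illustrative choices.

```python
import random


def effective_sample_size(weights):
    """N_eff = 1 / sum(w_i^2) for normalized weights (assumed indicator)."""
    total = sum(weights)
    normalized = [w / total for w in weights]
    return 1.0 / sum(w * w for w in normalized)


def low_variance_resample(particles, weights, rng):
    """Systematic resampling: one random offset, then equally spaced picks."""
    n = len(particles)
    step = sum(weights) / n
    position = rng.uniform(0.0, step)
    resampled = []
    index = 0
    cumulative = weights[0]
    for _ in range(n):
        # Advance to the particle whose cumulative weight covers the position.
        while position > cumulative and index < n - 1:
            index += 1
            cumulative += weights[index]
        resampled.append(particles[index])
        position += step
    return resampled


def maybe_resample(particles, weights, threshold_ratio=0.5, rng=None):
    """Resample only if N_eff drops below threshold_ratio * N (hypothetical default)."""
    rng = rng or random.Random(0)
    n = len(particles)
    if effective_sample_size(weights) < threshold_ratio * n:
        # Weights are reset to uniform after resampling.
        return low_variance_resample(particles, weights, rng), [1.0 / n] * n, True
    total = sum(weights)
    return list(particles), [w / total for w in weights], False
```

With uniform weights the particle set is left untouched. When one particle dominates, N_eff falls towards 1 and the set is resampled. Skipping unnecessary resampling steps keeps more distinct hypotheses alive between observations.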

|

|

TORO - Tree-based netwORk Optimizer

TORO is an optimization approach for constraint networks. It provides a highly efficient, gradient descent-based error minimization procedure and is one of the fastest methods so far (2007). In 2006, Olson et al. presented a novel approach to solve the graph-based SLAM problem by applying stochastic gradient descent to minimize the error introduced by constraints. TORO is an extension of Olson's algorithm. It applies a tree parameterization of the nodes in the graph that significantly improves the performance and enables a robot to cope with arbitrary network topologies. The latter allows us to bound the complexity of the algorithm to the size of the mapped area and not to the length of the trajectory. Project Website Contact person: Giorgio Grisetti, Cyrill Stachniss |
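To make the idea of constraint-wise gradient descent concrete, here is a heavily simplified sketch. It is neither TORO nor Olson's algorithm: it uses one-dimensional poses, no tree parameterization, a constant learning rate, and a fixed processing order instead of random sampling. The function names and the example network are hypothetical. Each constraint `(i, j, measured)` states that pose `j` should lie `measured` units after pose `i`, and pose 0 is held fixed as the anchor.

```python
def optimize_pose_chain(num_poses, constraints, learning_rate=0.5, sweeps=300):
    """Reduce constraint residuals one constraint at a time (1D toy problem)."""
    poses = [0.0] * num_poses
    for _ in range(sweeps):
        for i, j, measured in constraints:
            residual = measured - (poses[j] - poses[i])
            if i == 0:
                # Pose 0 is the fixed anchor, so only pose j moves.
                poses[j] += learning_rate * residual
            elif j == 0:
                poses[i] -= learning_rate * residual
            else:
                # Split the correction between both endpoints.
                poses[j] += 0.5 * learning_rate * residual
                poses[i] -= 0.5 * learning_rate * residual
    return poses


def total_squared_error(poses, constraints):
    """Sum of squared residuals over all constraints."""
    return sum((m - (poses[j] - poses[i])) ** 2 for i, j, m in constraints)


# Three odometry constraints plus one loop closure (hypothetical, consistent data).
example_constraints = [(0, 1, 1.0), (1, 2, 1.0), (2, 3, 1.0), (0, 3, 3.0)]
example_poses = optimize_pose_chain(4, example_constraints)
```

For consistent constraints such as these, the poses approach 0, 1, 2, 3. With noisy, contradictory constraints a constant learning rate typically keeps oscillating, which is one reason stochastic gradient descent methods use a decaying rate.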

|

|

CARMEN - The Carnegie Mellon Robot Navigation Toolkit

CARMEN is an open-source collection of software for mobile robot control. CARMEN is modular software designed to provide basic navigation primitives including: module communication infrastructure, base and sensor control, obstacle avoidance, localization, path planning, and mapping. Project Website Contact person: Cyrill Stachniss |

|

|

SmartTer - Smart All Terrain Vehicle

This project is a cooperation between the EPFL Lausanne (now ETH Zurich) and the University of Freiburg. The goal is to build an autonomous car based on a Smart. We participated in the European Land Robot Trial (ELROB). Project Website |

|

|

WebFAIR - Web Access to Commercial Fairs Through Mobile Agents

The EU funded project WebFAIR addresses the marketing and promotion requirements of large commercial exhibitions by providing broad access to information, services and commodities exhibited at the event. Essentially, WebFAIR aims at providing the means to remote corporate and private users for active and personalised workplace exploration and information visualisation for commercial purposes. Project Website Contact person: Wolfram Burgard |

|

|

TOURBOT - Interactive Museum Tele-presence through Robotic Avatars

The goal of TOURBOT is the development of an interactive tour-guide robot able to provide individual access to museums' exhibits and cultural heritage over the Internet. TOURBOT operates as the user's avatar in the museum by accepting commands over the web that direct it to move in its workspace and visit specific exhibits; in addition, TOURBOT can act as a flexible, on-site museum guide. More specifically, the objectives of the project are the following: (1) develop a robotic avatar with advanced navigation capabilities that will be able to move (semi)autonomously in the museum's premises, (2) develop appropriate web interfaces to the robotic avatar that will realize the distant user's telepresence, i.e., facilitate scene observation through the avatar's eyes, (3) facilitate personalized and realistic observation of the museum exhibits, and (4) enable on-site, interactive museum tour-guides. Project Website Contact person: Wolfram Burgard |

|

|

NURSEBOT - Robotic Assistants for the Elderly

We have succeeded in helping people to live longer. Now we need to help them to live better. The project PERSONAL ROBOTIC ASSISTANTS FOR THE ELDERLY is an inter-disciplinary multi-university research initiative focused on robotic technology for the elderly that brings together researchers from the University of Pittsburgh and Carnegie Mellon University. The goal of our project is to develop mobile, personal service robots that assist elderly people suffering from chronic disorders in their everyday life. We are currently developing an autonomous mobile robot that "lives" in a private home of a chronically ill elderly person. The robot provides a research platform to test out a range of ideas for assisting elderly people. Project Website Contact person: Wolfram Burgard |

|

|

Rhino - The Interactive Museum Tour-guide Robot

The "Deutsches Museum Bonn" (German Museum Bonn) is the first museum for contemporary technology in Germany. This year, the "Deutsches Museum Bonn" contributes for the first time to the "Museumsmeilenfest" of the city of Bonn. The "Museumsmeilenfest", which takes place May 29 to June 1, is a great cultural event, at which the major museums in Bonn offer special exhibitions and tours. It is surrounded by many cultural attractions in the city of Bonn. Visitors to the "Deutsches Museum Bonn" will have the opportunity to be shown through the museum by a MOBILE ROBOT. During the "Museumsmeilenfest" visitors can directly participate in several tours, guided by the mobile robot "RHINO". RHINO will explain what the people see, and upon request provide in-depth information concerning individual exhibits. Project Website Contact person: Wolfram Burgard |

|

|

Minerva - Carnegie Mellon's Robotic Tourguide Project

Minerva is a talking robot designed to accommodate people in public spaces. She perceives her environment through her sensors (cameras, laser range finders, ultrasonic sensors), and decides what to do using her computers. Minerva actively approaches people, offers tours, and then leads them from exhibit to exhibit. When Minerva is happy, she sings and smiles at nearby people. But don't block her way too often--otherwise, she'll become frustrated and might frown at you and honk her horn! The goal of the Minerva project is to bring robots closer to people. Recent progress in robotics and artificial intelligence has made it possible to build interactive mobile robots that operate highly reliably in crowded environments. In the next decade, robots like Minerva are expected to become part of many people's lives, where they will assist them in their everyday activities, perform janitorial services, or simply entertain them. This project is carried out jointly by Carnegie Mellon University's Robot Learning Laboratory and the University of Bonn's Computer Science Department III, and sponsored by the Lemelson Center at the National Museum of American History. Minerva is a next generation robotic tourguide, built by the same group that developed the world's first robotic museum tourguide Rhino, displayed in the Deutsches Museum Bonn. Project Website Contact person: Wolfram Burgard |

|

|

The Mobile Robot Rhino

The RHINO project is a joint project between the "Institut für Informatik III" of the Rheinische Friedrich-Wilhelms-Universität Bonn and Carnegie Mellon University (USA). The central scientific goal of the RHINO project is the analysis and synthesis of computer software that can learn from experience. The team believes that an essential aspect of future computer software will be the ability to flexibly adapt to changes, without human intervention. The ability to learn will soon enable robots like RHINO to perform complex tasks, such as transportation and delivery, tours through buildings, cleaning, inspection, and maintenance. Project Website Contact person: Wolfram Burgard |

|